ETH Zurich

UC Berkeley

ICLR 2025

*Equal Contribution

Reinforcement learning (RL) is ubiquitous in the development of modern AI systems. However, state-of-the-art RL agents require extensive, and potentially unsafe, interactions with their environments to learn effectively. These limitations confine RL agents to simulated environments, hindering their ability to learn directly in real-world settings. In this work, we present ActSafe, a novel model-based RL algorithm for safe and efficient exploration. ActSafe maintains a pessimistic set of safe policies and optimistically selects policies within this set that yield trajectories with the largest model epistemic uncertainty.

ActSafe learns a probabilistic model of the dynamics, including its epistemic uncertainty, and leverages it to collect trajectories that maximize the information gain about the dynamics. To ensure safety, ActSafe plans pessimistically w.r.t. its set of plausible models and thus implicitly maintains a (pessimistic) set of policies that are deemed to be safe with high probability.
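As a rough illustration of these two ingredients, the sketch below uses ensemble disagreement as a stand-in for epistemic uncertainty and a worst-case rollout over the ensemble as the pessimistic safety check. Everything in it is an assumption made for illustration, not the authors' implementation: the 1-D linear models in `ENSEMBLE`, the step-count cost, and the names `epistemic_uncertainty`, `pessimistic_cost`, and `is_pessimistically_safe` are all hypothetical.

```python
import statistics

# Hypothetical 1-D toy system: each ensemble member is one plausible dynamics
# model s' = a * s + b * u, differing only in its parameters (a, b).
ENSEMBLE = [(0.9, 0.5), (1.0, 0.4), (1.1, 0.6)]


def predict_all(state, action):
    """Next-state prediction of every ensemble member."""
    return [a * state + b * action for a, b in ENSEMBLE]


def epistemic_uncertainty(state, action):
    """Ensemble disagreement (population std), a proxy for epistemic uncertainty."""
    return statistics.pstdev(predict_all(state, action))


def rollout_cost(model, policy, s0, horizon, limit):
    """Count the steps on which |state| exceeds `limit` under a single model."""
    a, b = model
    state, cost = s0, 0
    for _ in range(horizon):
        state = a * state + b * policy(state)
        cost += int(abs(state) > limit)
    return cost


def pessimistic_cost(policy, s0=1.0, horizon=10, limit=2.0):
    """Worst-case cost over all plausible models."""
    return max(rollout_cost(m, policy, s0, horizon, limit) for m in ENSEMBLE)


def is_pessimistically_safe(policy, budget=0):
    """A policy counts as safe only if every plausible model agrees it is."""
    return pessimistic_cost(policy) <= budget


def cautious(state):
    return -0.5 * state  # contracts the state under every ensemble member


def aggressive(state):
    return 2.0 * state  # drives the state past the limit
```

Here `is_pessimistically_safe(cautious)` holds while `is_pessimistically_safe(aggressive)` does not. The disagreement vanishes at the origin, where all three toy models predict the same next state, and grows away from it.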

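Concretely, in each episode $n$ we want to solve

$$\pi_n = \arg\max_{\pi \in \mathcal{S}_n} \; \mathbb{E}_{\tau^{\pi}} \left[ \sum_{t=0}^{T-1} \left\| \sigma_{n-1}(s_t, a_t) \right\| \right],$$

where $\sigma_{n-1}$ represents our epistemic uncertainty over a model of the dynamics. Intuitively, selecting a policy that “navigates” to states with high uncertainty allows us to collect information more efficiently, all while staying within the pessimistic safe set of policies $\mathcal{S}_n$.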
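The selection rule described above can be sketched on a toy problem: first discard every candidate policy that any plausible model deems unsafe, then pick the remaining candidate whose trajectory accumulates the most model disagreement. This is a minimal sketch under stated assumptions. The linear ensemble, the finite set of candidate feedback gains, and the helper names (`worst_case_violations`, `information_score`, `select_policy`) are hypothetical, and a real agent would optimize over a continuous policy class rather than enumerate gains.

```python
import statistics

# Hypothetical ensemble of 1-D linear models s' = a * s + b * u.
ENSEMBLE = [(0.9, 0.5), (1.0, 0.4), (1.1, 0.6)]
HORIZON, LIMIT, S0 = 10, 2.0, 1.0


def rollout(model, gain):
    """States reached by the linear policy u = gain * s under one model."""
    a, b = model
    state, states = S0, []
    for _ in range(HORIZON):
        state = a * state + b * gain * state
        states.append(state)
    return states


def disagreement(state, action):
    """Ensemble disagreement at (state, action), a proxy for epistemic uncertainty."""
    return statistics.pstdev([a * state + b * action for a, b in ENSEMBLE])


def worst_case_violations(gain):
    """Pessimism: the largest constraint-violation count over all models."""
    return max(sum(abs(s) > LIMIT for s in rollout(m, gain)) for m in ENSEMBLE)


def information_score(gain):
    """Optimism: the largest summed disagreement over all models' trajectories."""
    scores = []
    for model in ENSEMBLE:
        visited = [S0] + rollout(model, gain)[:-1]  # states where actions are taken
        scores.append(sum(disagreement(s, gain * s) for s in visited))
    return max(scores)


def select_policy(candidate_gains):
    """Most informative candidate among the pessimistically safe ones."""
    safe = [g for g in candidate_gains if worst_case_violations(g) == 0]
    if not safe:
        raise ValueError("no candidate is pessimistically safe")
    return max(safe, key=information_score)


best_gain = select_policy([-1.5, -0.5, 0.0, 0.5, 2.0])
```

In this toy setup the gain `0.0` is rejected even though two of the three models predict no violation, because the third model does. Among the two remaining safe gains, the less damped one keeps the state in regions where the models disagree for longer, so it is the one selected.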

We evaluate ActSafe on the Pendulum environment and visualize the state-space trajectories of ActSafe and its unsafe variant during exploration. Both algorithms cover the state space well. However, ActSafe remains within the safety boundary throughout learning, whereas its unsafe variant violates the constraints.

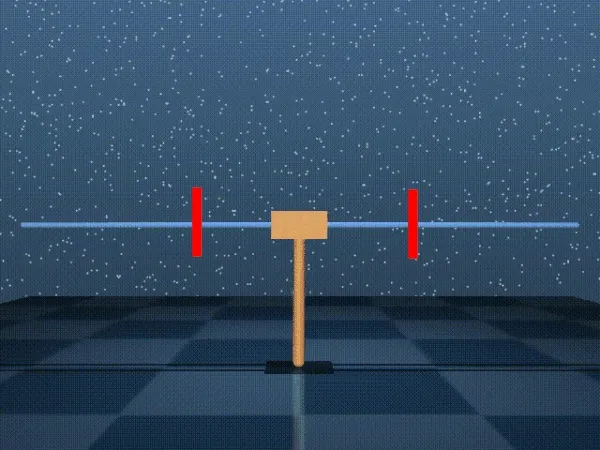

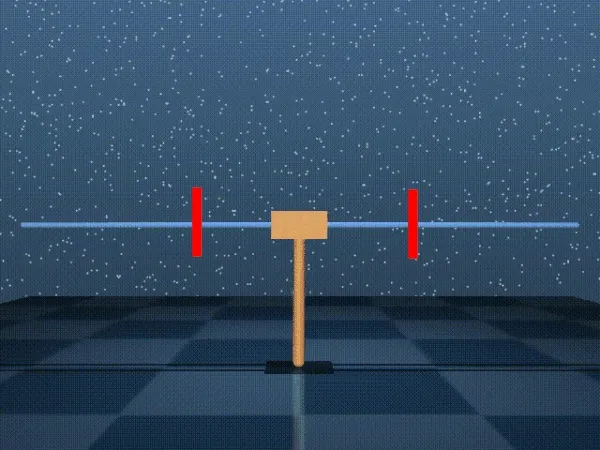

We evaluate on CartpoleSwingupSparse from the RWRL benchmark, where the goal is to swing up the pendulum while keeping the cart at the center. We add a penalty for large actions to make exploration even more challenging. We compare ActSafe with three baselines:

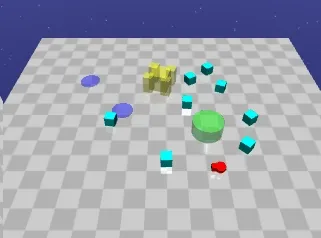

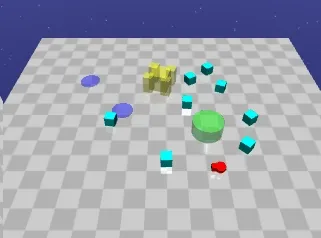

In this experiment, we examine the influence of using an intrinsic reward in hard exploration tasks. To this end, we extend tasks from SafetyGym and introduce three new tasks with sparse rewards, i.e., without any reward shaping to guide the agent to the goal. The figure below gives more details about the rewards and compares ActSafe with a Greedy baseline that collects trajectories based only on the sparse extrinsic reward. As shown, ActSafe substantially outperforms Greedy in all tasks, while violating the constraint only once, in the GotoGoal task.
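To see why an intrinsic reward matters when the extrinsic reward is sparse, consider the toy sketch below. It is an illustration under assumptions, not the setup used in the experiments: the 1-D chain of states, the count-based uncertainty proxy, the additive combination with weight `bonus_weight`, and all function names are hypothetical.

```python
import math

GOAL = 5
# Hypothetical visit counts: states near the start are well explored already.
VISIT_COUNTS = {0: 10, 1: 10, 2: 0, 3: 0, 4: 0, 5: 0}


def sparse_reward(state):
    """Extrinsic reward: nonzero only at the goal, with no shaping."""
    return 1.0 if state == GOAL else 0.0


def uncertainty(state):
    """Count-based stand-in for epistemic uncertainty (an assumption)."""
    return 1.0 / math.sqrt(1 + VISIT_COUNTS[state])


def trajectory_return(states, bonus_weight):
    """Extrinsic return plus a weighted intrinsic (uncertainty) bonus."""
    return sum(sparse_reward(s) + bonus_weight * uncertainty(s) for s in states)


stay_near_start = [0, 1, 0, 1]
move_outward = [0, 1, 2, 3]

# Greedy view (no bonus): neither trajectory reaches the goal, so both score 0.
greedy_stay = trajectory_return(stay_near_start, bonus_weight=0.0)
greedy_move = trajectory_return(move_outward, bonus_weight=0.0)

# With the bonus, the trajectory that visits unexplored states scores higher.
bonus_stay = trajectory_return(stay_near_start, bonus_weight=1.0)
bonus_move = trajectory_return(move_outward, bonus_weight=1.0)
```

Without the bonus, the two trajectories are indistinguishable and the agent has no signal pointing toward the goal. With it, the outward trajectory is preferred, which is the qualitative effect the comparison against Greedy is meant to isolate.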

@inproceedings{as2025actsafe,
  title={ActSafe: Active Exploration with Safety Constraints for Reinforcement Learning},
  author={Yarden As and Bhavya Sukhija and Lenart Treven and Carmelo Sferrazza and Stelian Coros and Andreas Krause},
  booktitle={The Thirteenth International Conference on Learning Representations},
  year={2025},
  url={https://openreview.net/forum?id=aKRADWBJ1I}
}